Riemannian geometry in elliptical distributions

Abstract

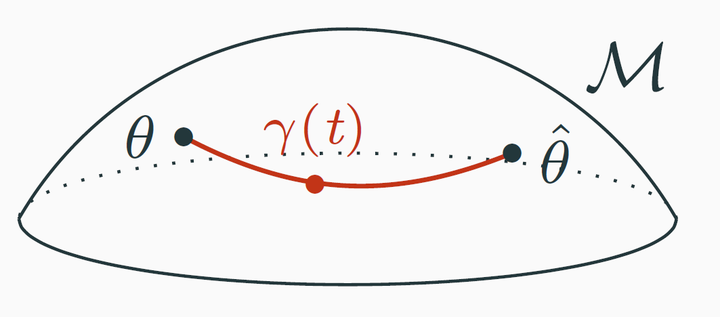

In an estimation problem, a Riemannian manifold arises naturally when the parameter space is endowed with the Fisher information metric. This point of view makes many tools from differential geometry available. After a brief introduction to these concepts, we will present some recent work on the geometry of elliptical distributions and its practical implications. The exposition will be organized along three main axes: Riemannian optimization, intrinsic Cramér-Rao bounds, and classification/clustering using Riemannian distances.

Date

Oct 7, 2021 1:00 PM

Event

Location

Rüdesheim